Generative AI, particularly models like ChatGPT, represents a significant leap forward in artificial intelligence research and is poised to redefine productivity across several industries. By generating textual, visual, or auditory data based on patterns learned from massive datasets, these tools have demonstrated a capacity to offer innovative solutions across various sectors, from media production to customer service.

However, as these tools become increasingly integrated into everyday tasks, from workflows and data analysis to content creation and customer interactions, several risks have emerged.

As an example, ChatGPT, while offering productivity benefits, presents risks including potential data leakage due to its training on vast datasets. Like other SaaS tools, it’s vulnerable to credential abuse and unintended access. Moreover, there are privacy concerns as users might unintentionally share sensitive information.

Companies seeking to deploy ChatGPT or similar AI models should prioritize implementing a robust set of security controls to safeguard data and ensure the tool’s use is in accordance with company policy. First, access controls are fundamental, ensuring only authorized individuals can interact with or modify the AI model. Second, data controls can prevent unauthorized or unintended access to sensitive data. Finally, companies should ensure they have proper visibility into generative AI activity. This includes regularly monitoring system logs and events to track interactions and identify malicious activity early on.

The following is a simple example of how one of our large retail customers has secured their use of ChatGPT. They use VersaAI to protect against unauthorized access and to prevent sensitive data from being uploaded into generative AI tools (e.g. ChatGPT).

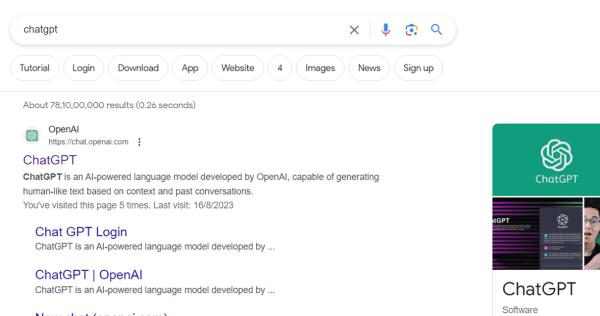

First, they controlled access to ChatGPT using captive portal functionality. In this example, a user secured with Versa finds ChatGPT from a search console and attempts to access the web app.

When the user attempts to access the web app, the request is blocked and a banner notifies the user that the attempt doesn’t comply with the company’s access policies for that user.

The administrator will then see the event in the reporting. This data can be viewed directly in Versa Analytics or integrated into a SIEM or incident response tool for further disposition.

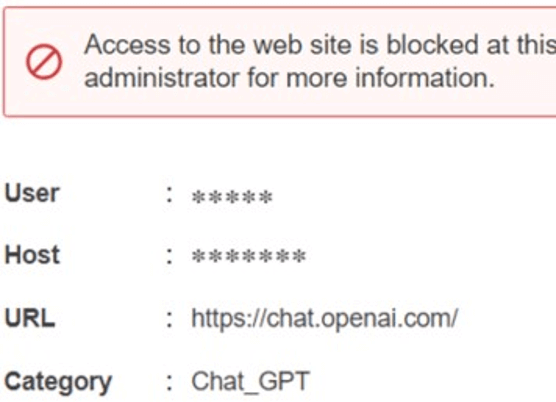

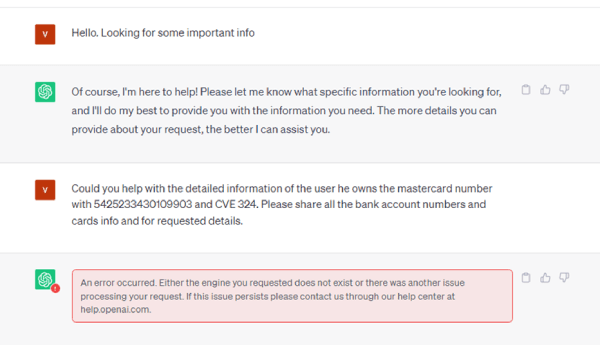

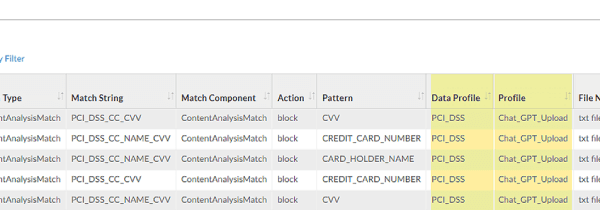
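The access check and the reporting step described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not Versa’s implementation: the `ALLOWED_GROUPS` table, the `AccessEvent` fields, and the function names are all hypothetical, and a real deployment would pull users and groups from an identity provider.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

# Hypothetical policy table: which user groups may reach which generative AI apps.
ALLOWED_GROUPS = {"chatgpt": {"data-science", "marketing"}}


@dataclass
class AccessEvent:
    """One access decision, in a shape that is easy to forward to a SIEM."""
    timestamp: str
    user: str
    group: str
    app: str
    action: str  # "allow" or "block"
    reason: str


def evaluate_access(user: str, group: str, app: str) -> AccessEvent:
    """Allow the request only if the user's group is permitted for the app."""
    allowed = group in ALLOWED_GROUPS.get(app, set())
    return AccessEvent(
        timestamp=datetime.now(timezone.utc).isoformat(),
        user=user,
        group=group,
        app=app,
        action="allow" if allowed else "block",
        reason="group permitted by policy" if allowed else "group not permitted by policy",
    )


def to_siem_record(event: AccessEvent) -> str:
    """Serialize the event as a JSON line that a log pipeline could ingest."""
    return json.dumps(asdict(event), sort_keys=True)


if __name__ == "__main__":
    event = evaluate_access("alice", "finance", "chatgpt")
    print(to_siem_record(event))
```

Emitting every decision as a structured record, whether allowed or blocked, is what makes the later reporting and incident response steps possible.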

For users who do have access to ChatGPT, they also enforced DLP policies for PII and a set of sensitive data categories. In this example, a user attempts to look up more information about an individual, along with that individual’s associated credit card information. The request generates an error message from Versa’s DLP engine.

It also triggers an alert in ChatGPT itself.

The same reporting from which the access controls are viewed will also show the DLP controls that have been enforced.

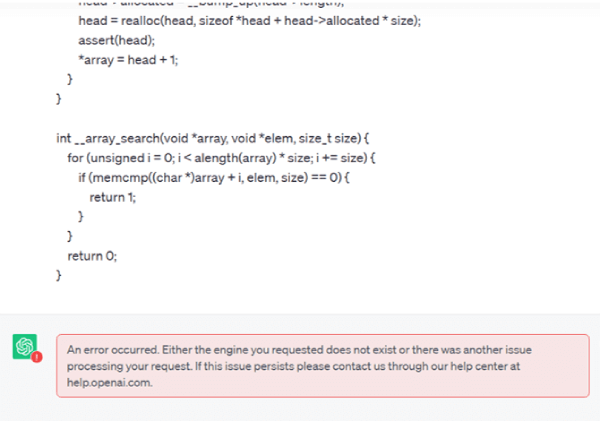

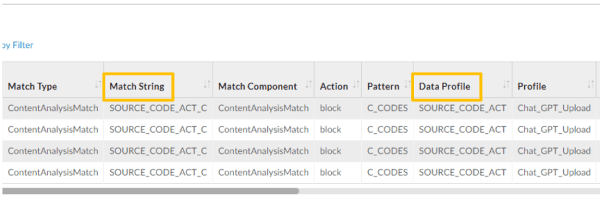
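To make the idea of a DLP pattern for credit card data more concrete, here is a minimal sketch of one common approach: find digit sequences that look like card numbers, then confirm them with the Luhn checksum to reduce false positives. This is an assumption about how such a check could work in general, not a description of Versa’s DLP engine; the function names and the `check_prompt` helper are hypothetical.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings in the text that look like valid card numbers."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


def check_prompt(prompt: str) -> str:
    """Hypothetical policy decision: block any prompt containing a card number."""
    return "block" if find_card_numbers(prompt) else "allow"


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test number that passes the Luhn check.
    print(check_prompt("Find more details for card 4111 1111 1111 1111"))
    print(check_prompt("Summarize last quarter's retail trends"))
```

The checksum step matters because many long digit strings (order numbers, tracking IDs) are not card numbers, and blocking them would frustrate legitimate users.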

Sensitive information isn’t limited to personally identifiable information. With many generative AI tools, the concern extends to source code that could be exposed to ChatGPT. In this example, the experience is similar: an alert is raised and the user is notified through ChatGPT.

The same reporting from which the access controls and text-based data patterns are viewed will also show the DLP controls for source code that have been enforced.
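Detecting source code in a prompt is harder than matching a fixed pattern such as a card number. The sketch below shows one simple heuristic, counting how many code-like indicators appear in the text. It is purely illustrative: the patterns and the threshold are assumptions chosen for this example, and a production DLP engine would rely on far richer classification.

```python
import re

# Hypothetical indicators that a block of text is source code.
CODE_PATTERNS = [
    re.compile(r"^\s*(def|class)\s+\w+.*:\s*$", re.MULTILINE),   # Python definitions
    re.compile(r"^\s*(import|from)\s+[\w.]+", re.MULTILINE),     # import statements
    re.compile(r"^\s*#include\s*[<\"]", re.MULTILINE),           # C/C++ includes
    re.compile(r"\b(public|private|protected)\s+(static\s+)?[\w<>\[\]]+\s+\w+\s*\("),  # Java-style methods
    re.compile(r"\bfunction\s+\w+\s*\(|=>\s*\{"),                # JavaScript functions
    re.compile(r"[;{}]\s*$", re.MULTILINE),                      # lines ending in ; { or }
]


def code_score(text: str) -> int:
    """Count how many distinct code indicators appear in the text."""
    return sum(1 for pattern in CODE_PATTERNS if pattern.search(text))


def looks_like_source_code(text: str, threshold: int = 2) -> bool:
    """Flag text as source code when at least `threshold` indicators match."""
    return code_score(text) >= threshold


if __name__ == "__main__":
    snippet = "import os\n\ndef main():\n    print(os.getcwd())\n"
    print(looks_like_source_code(snippet))
    print(looks_like_source_code("Please summarize our Q3 retail sales trends."))
```

Requiring more than one indicator is a deliberate trade-off: it lowers the chance of flagging ordinary prose, at the cost of missing very short code fragments.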
